We’ve spent four months working to rebuild the web app behind the Queen’s Awards for Enterprise. The prestigious business awards moved their application process online a couple of years ago, so we had a natural starting point – the old system. But although you can learn valuable lessons from a previous build, it always pays to go back to the foundation of the app – its users – to make sure the rebuild is informed by their needs first and foremost.

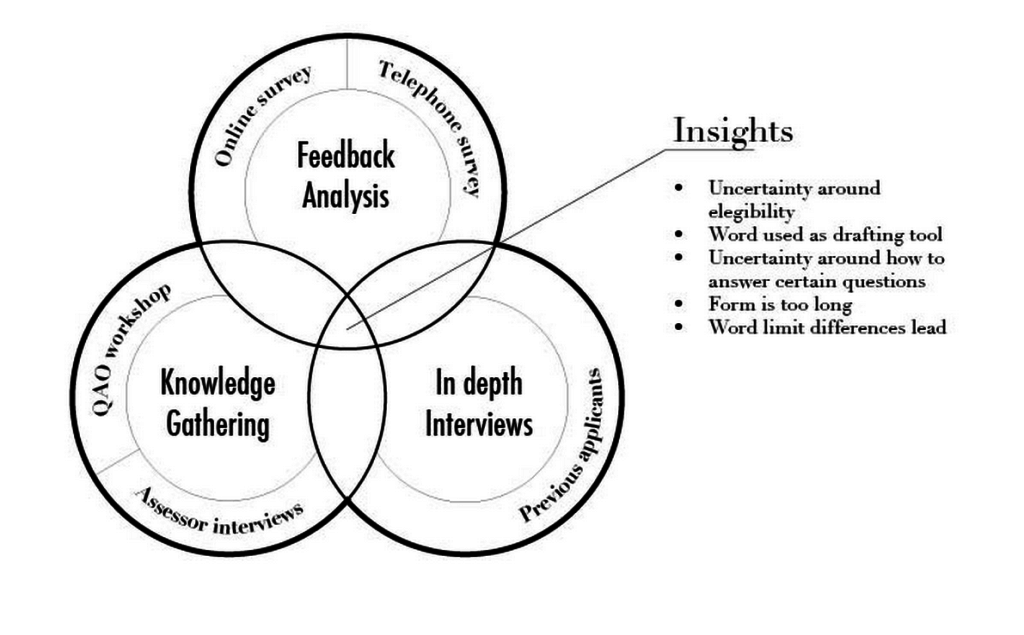

Due to the time pressure of the application cycle of the Queen’s Awards, we condensed our discovery phase into a three-week period, but still managed to fit in a broad selection of data gathering and analysis.

Planning a strong discovery phase

The first step of discovery was to sit down with the QAE team and look at the list of available options for requirements gathering and user research – what information we already had available, what knowledge we already had access to, what methods we could use to collect new data, and how we could access our users.

Together, we identified existing data that would help with the project – in particular, the results from the previous applicant surveys. We also discovered that the QAE team themselves had detailed user knowledge we could draw on.

Initially, the Bit Zesty research team would analyse this existing data, then move on to active research – holding knowledge gathering workshops with the QAE team and interviews with assessors, before concluding the discovery phase with a series of in-depth applicant interviews.

Using existing data to its full potential

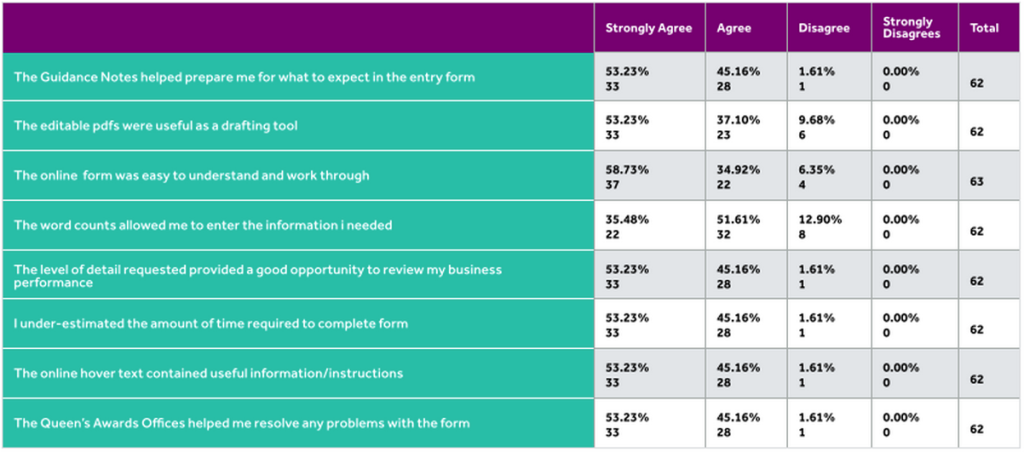

Results from one of the online surveys collected by QAE staff.

The QAE team had collected feedback from online and telephone surveys which, in the context of the new project, could be repurposed to provide a starting point for our research team. These surveys, and the bank of quantitative data they provided, were the foundation of our discovery phase, showing us some of the larger trends – such as the many applicants who copied questions and the relevant guidance notes into their own drafting tool, and the high number of users unsure of their eligibility for awards.

The next step: knowledge gathering workshops

The next stage for our research team was to begin knowledge gathering workshops with the members of the QAE team who take enquiries from applicants, using their understanding of the most common user issues to build upon the insights from the survey data.

To avoid group bias, all participants were asked to write down on post-it notes (rather than speak aloud) the telephone and email enquiries they receive from applicants. They were also asked to note the frequency of these enquiries. By grouping similar notes together, we could then identify the most common issues raised by applicants to the QAE team.

For example, the most common enquiries regarding eligibility:

An estimated total of 160 enquiries on eligibility.

25: We are doing very well, record profits, etc. but we had a tiny dip. Can we win?

25: Is there any chance (of winning)?

20: What is the percentage of increase in sales you are looking for?

20: Am I eligible to apply?

20: Can we apply for export/innovation for service rather than product?

15: We are a charity, are we eligible?

15: Can I enter on more than one category?

10: How many years do we have to show for trading?

10: What is the best category for me?

This exercise provided us with a much more detailed understanding of our users than the existing data, with the eligibility feedback drilling further down into the specific causes for eligibility confusion (e.g. dips in profit/turnover, non-profit entries, product/service eligibility) and ranking them by their frequency.
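As a rough illustration of the tallying step (a sketch, not the tooling used in the workshop), the grouped post-it notes can be ranked by their estimated frequency in a few lines of Python. The counts are the estimates listed above; the function name `rank_by_frequency` and the percentage column are assumptions added for illustration.

```python
# Estimated enquiry counts, taken from the workshop list above.
eligibility_enquiries = {
    "We are doing very well, record profits, etc. but we had a tiny dip. Can we win?": 25,
    "Is there any chance (of winning)?": 25,
    "What is the percentage of increase in sales you are looking for?": 20,
    "Am I eligible to apply?": 20,
    "Can we apply for export/innovation for service rather than product?": 20,
    "We are a charity, are we eligible?": 15,
    "Can I enter on more than one category?": 15,
    "How many years do we have to show for trading?": 10,
    "What is the best category for me?": 10,
}


def rank_by_frequency(enquiries):
    """Return (text, count, share in %) tuples, most frequent first."""
    total = sum(enquiries.values())
    # sorted() is stable, so enquiries with equal counts keep their listed order.
    ranked = sorted(enquiries.items(), key=lambda item: item[1], reverse=True)
    return [(text, count, round(100 * count / total, 1)) for text, count in ranked]


total = sum(eligibility_enquiries.values())
ranked = rank_by_frequency(eligibility_enquiries)

print(f"Estimated eligibility enquiries: {total}")
for text, count, share in ranked:
    print(f"{count:>3} ({share}%)  {text}")
```

Expressing each group as a share of the total makes it easier to compare themes of different sizes, for example eligibility against other enquiry topics.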

Subsequently, the research team moved on to interviews with the Queen’s Awards for Enterprise assessors. Another key user group to take into account during the service design process, the assessors were more interested in the questions and content of the application form itself than in the service as a whole. So we applied the assessors’ knowledge of the most misunderstood questions, and of the sections of the application forms that were toughest to answer, to inform our design of the new content.

In-depth interviews: the thought processes behind the interactions

The in-depth ‘previous applicant’ interviews set up by the QAE team were an opportunity to get to the bottom of the quantitative data we had already analysed and collected. With a much smaller number of participants than the previous steps, qualitative data was the main objective here – from which we would hopefully be able to develop our understanding of, and empathy with, our core group of users.

To this end, the interviews were only semi-structured. Although we had some points of conversation, the discovery team wanted to encourage the participants to follow their own trains of thought regarding the Queen’s Awards application process. A number of key details, all of which have influenced the shape of our final service, came out as a result.

For example, users had previously identified discrepancies between the word count on the system and on Microsoft Word. But it wasn’t until this was corroborated during the interview phase, with multiple applicants bringing up the importance of word counts and using Word to draft their answers, that we realised some users were having to shave up to four or five words off their answers – which could end up requiring a significant rewrite. With the high number of users choosing Word as their method of drafting, this problem was quite common – so we chose to introduce softer word limits.
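To show how two word counters can disagree, and what a softer limit might look like, here is a minimal Python sketch. It is an assumption-laden illustration, not the service's actual implementation: the two counting rules, the function names and the 10% tolerance are all hypothetical, and neither rule is claimed to match Microsoft Word exactly.

```python
import re


def count_words_whitespace(text: str) -> int:
    """Count whitespace-separated tokens."""
    return len(text.split())


def count_words_regex(text: str) -> int:
    """Count runs of word characters, so 'year-on-year' counts as three words."""
    return len(re.findall(r"\w+", text))


def check_soft_limit(word_count: int, limit: int, tolerance_pct: int = 10) -> str:
    """Return 'ok', 'warn' or 'over' for a limit with some headroom.

    tolerance_pct is a hypothetical allowance above the stated limit.
    """
    hard_cap = limit + limit * tolerance_pct // 100
    if word_count <= limit:
        return "ok"
    if word_count <= hard_cap:
        return "warn"  # over the stated limit, but still accepted
    return "over"


sample = "Year-on-year growth – up 12.5% – exceeded our forecasts."
print(count_words_whitespace(sample), count_words_regex(sample))
print(check_soft_limit(504, 500))
```

With a soft limit, an answer that one counter measures at 500 words and another at 504 produces a warning rather than a rejection, so the applicant is not forced into a rewrite over a handful of words.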

The research team was also keen to explore the thinking behind using Word as a drafting tool, with the in-depth interviews providing the perfect opportunity to get into these users’ shoes. What they found was a number of different explanations for using Word: some users were afraid of losing their data if the web form didn’t save properly; some users preferred to collaborate with colleagues by emailing the document round; some liked having a reliable word count available at all times.

“I kept saving the form to make sure that if it happens to crash for any reason, I didn’t leave any information per se. But, that’s why we have a word document…”

These thought processes were some of the most valuable pieces of data we came across in terms of influencing our approach to service design. They gave us the means to flesh out our understanding of the interaction (i.e. using a Word document) with the reasoning behind it, as well as improving our understanding of the wider user group. What we see is a glimpse of the average Queen’s Award applicant: organised, careful and keen to complete the application to the best of their ability.

Discovery at its most useful

Three weeks might not seem like a particularly long stint for a discovery – but close collaboration with the QAE staff from day 1 provided our research team with as good a variety of reliable methods for collecting data as you’d find in most longer discovery phases: existing data analysis, knowledge gathering in the form of customer enquiry workshops and assessor interviews, and in-depth applicant interviews.

Triangulating our research by using multiple sources made it easier to find patterns in the user feedback, then back them up with data from one or more of the other sources. For example, the way our users approached the task of applying – by gathering all the information necessary and then beginning their answers in a Word document – was first raised in the existing survey data, then corroborated in the knowledge gathering workshops, and explained by applicants in their interviews. Similarly, the difficulties with eligibility – which went on to be one of the most tested and iterated upon parts of the service – were identified from the existing data, and broken down in more detail during the applicant enquiry workshop.
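The cross-checking described here can be sketched as a simple lookup of which sources support each finding. This is only an illustration of the idea: the two findings and their sources come from the paragraph above, while the data structure, the `corroborated` helper and the two-source threshold are assumptions.

```python
# Which research sources raised each finding (from the paragraph above).
findings = {
    "Applicants draft their answers in Word": {
        "existing survey data",
        "knowledge gathering workshops",
        "applicant interviews",
    },
    "Applicants are unsure about eligibility": {
        "existing survey data",
        "knowledge gathering workshops",
    },
}


def corroborated(findings, minimum_sources=2):
    """Return the findings backed by at least `minimum_sources` sources."""
    return sorted(
        name for name, sources in findings.items() if len(sources) >= minimum_sources
    )


for name in corroborated(findings):
    print(f"{name}: {len(findings[name])} sources")
```

Raising `minimum_sources` to 3 would leave only the Word drafting finding, which is one way to decide which patterns have the strongest backing.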

All this overlapping research meant that no matter who looked at the data – designers, developers, stakeholders – they could be confident in its integrity, and by the time we began the alpha phase proper, we were already able to draw on a wealth of strong evidence to apply to the design of the new service.

You can read more about this project in the posts below:

How User Testing Became the Driving Force of the QAE Project

QAE: Content Design Within the Context of User Experience

The Queen’s Awards for Enterprise: Passing Digital by Default Service Assessments